ML-EECBS: Learning a better cost-to-go heuristic for EECBS

Multi-Robot Planning and Coordination

Carnegie Mellon University

Pittsburgh, PA

February 2024 - April 2024

Multi-Agent Path Finding (MAPF), crucial for applications like automated warehouses, demands rapid computation for collision-free paths of multiple robots. Conflict-Based Search (CBS) stands as a state-of-the-art algorithm, iteratively detecting and resolving conflicts by dividing problems into subproblems.

Explicit Estimation CBS (EECBS) (developed by Li et. al.) is a bounded-suboptimal variant of CBS,that uses online learning to obtain inadmissible estimates of the cost of the solution of each high-level node and uses Explicit Estimation Search to choose which high-level node to expand next. EECBS was meant to combat the scalability issues faced by CBS, which is an optimal solver. It's key advantage is the inadmissible heuristic that guides the high-level search, which is based on a hand-crafted estimate of the "cost-to-go". For a detailed explanation of the EECBS methodology, refer to the work by Li et. al.

Instead of using this handcrafted equation to predict cost-to-go, ML-EECBS uses a lightweight ML model to learn and predict this heuristic more accurately during run-time, to better guide the high-level search, leading to fewer node expansion and thus speeding up this algorithm.

The true "cost-to-go" value can be obtained after a (optimal) solution is found. The true value of cost-to-go for a node is the difference in costs of the solution node and the current node.

The "cost-to-go" refers to the anticipated rise in path costs from the current node to the final solution node. This increase happens as new constraints are added to initially infeasible paths to make them feasible, resulting in longer (and therefore more expensive) paths.

However, since this true value cannot be known for a given MAPF instance before the solution is found, we cannot use the true cost-to-go value as a heuristic. Instead, we must somehow estimate this value during runtime. This is the idea used in EECBS, where a handcrafted equation is used to estimate the cost-to-go heuristic for a given node. However, as we can see in the graph below, this is not very accurate.

The graph shows the predicted vs actual values of the cost-to-go heauristic for the nodes expanded for a particular MAPF instance. As we can see the Predicted values are often very different than the actual values

Instead of a handcrafted formula, we opt to use a learned model to estimate this value during run time, under the assumption that this value can be represented as some complex unknown function of the various parameters of a given instance. Specifically, we use 6 features from each node, and expand them with pairwise products and division by number of agents to create a feature set of 47 features, which we then use to train our model. The model we chose is a Support Vector Regressor with a linear kernel. To collect training data for this model, we use a modified version of EECBS, that continues searching for solutions even after the first solution is found, in order to find one with lesser cost. This is done to improve the quality of our training data. For each node that is expanded using this method, the respective 47 features are calculated and eventually used to train the model. Further details regarding model training can be found in the report below.

We train this model on various MAPF sequences and test its generalizability to varied start and goal positions, varied number of agents, and varied maps.

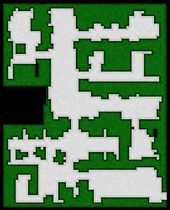

The SVR model was trained and tested on a total of 3 MAPF bench-marking maps, namely (from Left to Right) (i) empty-32-32, (ii) den312d, (iii) random-32-32

ML-EECBS demonstrated significantly higher accuracy in predicting the cost-to-go heuristic compared to the hand-crafted equation used in EECBS. This increased accuracy more than compensated for the computational overhead of making an SVR prediction at each node expansion during runtime, ultimately leading to a faster algorithm overall. The accuracy comparison is shown below:

The predictions of the SVR model and the EECBS formula on 2 instances of different maps. As we can see, the SVR model predictions (blue) were much more accurate than the EECBS formula predictions (green), resulting in an overall speedup of the algorithm.

MLEECBS solution of an instance of random-32-32 with 80 agents

Read the full report below: